From time to time, in discussion groups, some fellow hams start worrying about the voltage drop shown on the radio's readout.

This is from a Xiegu G90 group:

> However, the 0.9 voltage difference was still there. I am fairly sure now the difference is due either to inaccuracy of G90 volt meter. (I do know it reads 0.2 volts low in receiver mode) or there is something internal to the G90 causing the drop

I think some theory is needed to help users understand what the voltage readings in these radios (and in others as well) are really about.

The voltage is measured with an ADC (Analog to Digital Converter), which "translates" an analog voltage into a digital number.
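As a rough illustration of what "translating" means, here is a minimal sketch of an ideal ADC. It assumes a perfectly linear converter with a 3.3 V full-scale voltage and no noise, offset or gain error; `adc_read` is a hypothetical helper for illustration, not anything taken from the G90 firmware.

```python
import math

def adc_read(v_in: float, v_ref: float = 3.3, bits: int = 8) -> int:
    """Ideal ADC model (hypothetical helper): map an input voltage to an integer code."""
    full_scale = 2 ** bits  # number of distinct codes, e.g. 256 for 8 bits
    code = math.floor(v_in / v_ref * full_scale)
    # Clamp to the valid code range 0 .. 2^bits - 1
    return max(0, min(code, full_scale - 1))

print(adc_read(1.0))  # 1.0 / 3.3 * 256 = 77.57... -> 77
print(adc_read(3.3))  # the top of the range is clamped to 255
```

The converter never "sees" 1.0 V as such; it only reports that the input lies somewhere between code 77 and code 78.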

One important thing to understand is "resolution", which is the length (in bits) of the number that stores the analog voltage value.

In our case, the ADC of the STM32F4xx can be configured for 6-bit, 8-bit, 10-bit or 12-bit resolution.

Another important value is the maximum voltage that can be applied to the ADC input, which, in our case, is 3V3 (3.3 V).

Based on the datasheet of the uC, we can safely assume that the voltage is measured at 8-bit resolution: the best resolution available without tricks that cost extra processor cycles, which are precious in a uC that also has to do the DSP work.

Resolution = (ADC full-scale voltage) / 2^(number of ADC bits)

Therefore, in our case:

Resolution = 3.3 V / 2^8 = 3.3 V / 256 ≈ 12.9 mV.

These are the "steps" in which the voltage is measured at 8-bit resolution.
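The resolution formula is easy to check numerically. This short sketch evaluates it for every resolution the STM32F4xx ADC offers, assuming the 3.3 V full-scale voltage used in the text; `lsb_volts` is a hypothetical helper name. Note that 2^8 is 256, which gives about 12.9 mV per step in 8-bit mode.

```python
def lsb_volts(v_ref: float, bits: int) -> float:
    """Size of one ADC step: V_ref / 2^bits (hypothetical helper)."""
    return v_ref / 2 ** bits

# Step size for each configurable resolution, with a 3.3 V full scale
for bits in (6, 8, 10, 12):
    step_mv = lsb_volts(3.3, bits) * 1000
    print(f"{bits:2d}-bit: {2 ** bits:4d} steps of {step_mv:.2f} mV")
```

Every extra bit halves the step size, which is why 12-bit mode would be 16 times finer than 8-bit mode.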

BUT! There is a big caveat here...

We cannot measure the radio's input voltage directly this way, because it is well above 3.3 V, and anything much over 3.3 V will destroy the ADC input!

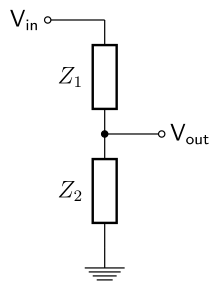

So we put a voltage divider in line!

The divider has to accept at least 20 V, because the radio accepts inputs of up to 17 V in normal operation.
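To see what "accepting 20 V" means for the divider, here is a small sketch that computes the minimum division ratio (Vin / Vadc) needed so that the highest expected input still lands at or below the ADC's 3.3 V limit. The 20 V figure comes from the text; `min_divider_ratio` is a hypothetical helper name.

```python
def min_divider_ratio(v_in_max: float, v_adc_max: float = 3.3) -> float:
    """Smallest Vin/Vadc ratio that keeps v_in_max inside the ADC range (hypothetical helper)."""
    return v_in_max / v_adc_max

ratio = min_divider_ratio(20.0)
print(f"need at least 1:{ratio:.2f}")  # 20 / 3.3 = 6.06...
```

Any divider of about 1:6 or more keeps a 20 V input within range; a larger ratio adds headroom, but each ADC step then covers a bigger slice of the input voltage.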

Let's find out what the voltage divider ratio is in our radio...

Looking at the Xiegu G90 schematic, the voltage divider is made of R63B and R67B, 3.3 kOhm and 470 Ohm respectively. That gives a ratio of (3300 + 470) / 470 ≈ 8.02, roughly 1:8, which means the resolution of the internal voltmeter is about 0.1 V (roughly 100 mV) per step and the maximum measurable voltage is about 3.3 V × 8 ≈ 26.4 V!
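The divider numbers can be reproduced with a short sketch. Only R63B and R67B come from the schematic; the ideal 8-bit ADC with a 3.3 V full scale is the assumption made above, and real resistors have tolerances that shift these values slightly.

```python
R_TOP = 3300.0    # R63B, from the G90 schematic (Ohm)
R_BOTTOM = 470.0  # R67B, from the G90 schematic (Ohm)
V_REF = 3.3       # ADC full-scale voltage (V)
BITS = 8          # assumed ADC resolution

ratio = (R_TOP + R_BOTTOM) / R_BOTTOM      # Vin / Vadc, about 8.02
step_at_input = V_REF / 2 ** BITS * ratio  # one ADC step as seen at the radio's power input
v_in_max = V_REF * ratio                   # input voltage that drives the ADC to full scale

print(f"divider 1:{ratio:.2f}, step {step_at_input * 1000:.0f} mV, max {v_in_max:.1f} V")
# prints: divider 1:8.02, step 103 mV, max 26.5 V
```

Because the exact ratio is slightly above 8, the computed step and maximum come out a little higher than the rounded 1:8 figures.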

So any variation in the input voltage of a bit more than one step (about 100 mV) may be shown as... surprise, 200 mV, or 0.2 V!

Simply put, the radio cannot show variations smaller than 0.2 V!
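Why can a change of only a little more than one step show up as about 0.2 V? Because the ADC only reports whole steps, the same real change can straddle one or two step boundaries depending on where it starts. The sketch below uses the ideal 8-bit model behind the R63B/R67B divider; `reading` is a hypothetical helper and the voltage pairs are made-up examples, not measurements.

```python
import math

RATIO = (3300.0 + 470.0) / 470.0  # R63B / R67B divider, Vin / Vadc
STEP = 3.3 / 2 ** 8 * RATIO       # about 0.103 V per ADC step at the input

def reading(v_in: float) -> float:
    """Voltage an ideal 8-bit ADC behind the divider would report (hypothetical model)."""
    code = math.floor(v_in / STEP)
    return code * STEP

# The same real change of 150 mV, starting from two different points
for v1 in (13.40, 13.45):
    v2 = v1 + 0.15
    delta = reading(v2) - reading(v1)
    print(f"{v1:.2f} V -> {v2:.2f} V: real change 0.15 V, reported change {delta:.2f} V")
```

In this model the first pair crosses two step boundaries (about 0.2 V reported) while the second crosses only one, even though the real change is identical.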

As for the big variations when transmitting: again, from the schematic we can see that all the PARF components are tied to +13V.

The tap used to measure the voltage sits well downstream of several components, each of which has some resistance:

- power cord;
- fuse holder;
- RFI choke, on both the ground and the positive leads;
- two MOSFETs used for reverse polarity protection and power-on switching.

So a total of 0.2 to 0.5 Ohm for all of these is a reasonable estimate, and that alone could explain the voltage swing measured by the internal DMM.
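To put numbers on that, Ohm's law gives the drop across the feed path as V = I × R. The sketch below uses the 0.2 to 0.5 Ohm range from the text; the supply currents are hypothetical round figures for illustration, not measured G90 values.

```python
def voltage_drop(current_a: float, resistance_ohm: float) -> float:
    """Ohm's law: voltage lost across the series resistance of the feed path."""
    return current_a * resistance_ohm

# Hypothetical supply currents, roughly receive vs. transmit (assumed, not measured)
for current in (0.5, 2.0, 4.0):
    low = voltage_drop(current, 0.2)
    high = voltage_drop(current, 0.5)
    print(f"{current:.1f} A: {low:.2f} V to {high:.2f} V drop")
```

With an assumed transmit current of a few amperes, the 0.9 V difference from the quoted post falls inside this range.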

I think this gives you a reason to enjoy the radio without worrying about that voltage readout!

Cheers,

Adrian
